Posted 30 March 2023, 11:58

Modified 30 March 2023, 14:15

A neural network has already caused an earthquake. Scientists urge a halt to the creation of AI

Novye Izvestia has already reported that the scientific community is extremely concerned about the pace at which artificial intelligence is being introduced. The problem is not that AI based on neural networks will learn to think for itself, but that neural networks, according to experts, accelerate most ongoing social processes too much. This will inevitably lead humanity to a dead end, and in the meantime a huge number of lives will be broken.

That is why a letter published by the non-profit Future of Life Institute is circulating widely in the media and on social networks. In it, SpaceX head Elon Musk, Apple co-founder Steve Wozniak, philanthropist Andrew Yang and about a thousand other artificial intelligence researchers called for "immediately suspending" the training of AI systems "more powerful than GPT-4".

The letter says that artificial intelligence systems with "human-competitive intelligence" can pose "serious risks for society and humanity." It calls on laboratories to suspend training for six months. During this time, the industry must develop a set of rules that it undertakes to follow when using AI in the future. Otherwise, the world may face global risks: competition from neural networks in the labor market, skillful fakes on social networks and loss of control over civilization:

"Powerful artificial intelligence systems should be developed only when we are sure that their effects will be positive and their risks will be manageable," the authors of the letter emphasize.

More than 1,125 people signed the appeal, including Pinterest co-founder Evan Sharp, Ripple co-founder Chris Larsen, Stability AI CEO Emad Mostaque, as well as researchers from DeepMind, Harvard, Oxford and Cambridge. The letter was also signed by Yoshua Bengio and Stuart Russell, heavyweights in the field of artificial intelligence. The latter also called for suspending the development of advanced AI until independent experts develop, implement and verify shared safety protocols for such AI systems.

The truth can no longer be distinguished from fiction

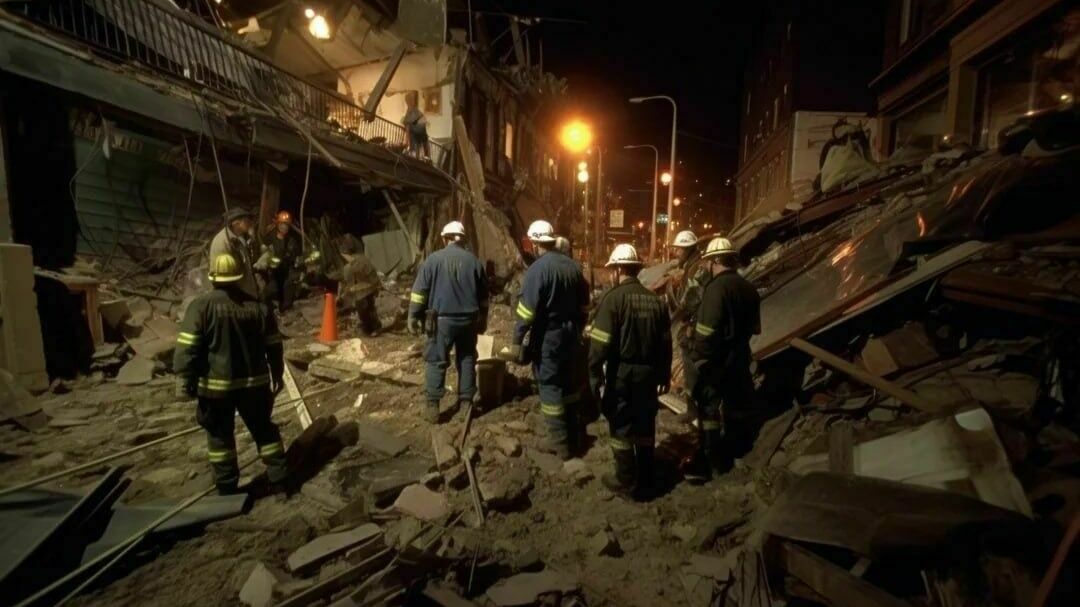

How serious the situation is can be seen from photographs of the aftermath of the Great Cascadia earthquake, which struck Oregon 22 years ago, published by the channel "Caution, News". The natural disaster claimed the lives of 8 thousand people and left 785 thousand homeless.

But there is one caveat: none of this happened. The photos and details of the incident were generated by enthusiasts on the English-language forum Reddit using artificial intelligence. The trace of AI can be spotted through small errors, for example the unnatural position of the girl's hand in the last photo.

All this was done using the Midjourney neural network, which generates images from text. Reddit users wondered whether they could create authentic-looking photos of a fake event in the style of documentary footage. Then the enthusiasts started using ChatGPT to flesh out the details. As a result, it became difficult, to say the least, for an unprepared reader to distinguish truth from fiction: many forum users at first even pretended that they remembered something about that disaster. The venture was a success, and soon stories about the "blue plague in the USSR of the 70s" and the "solar superstorm of 2012" appeared on Reddit, also with Midjourney images and tons of generated text.

The neural network approach will not solve social problems

Network analyst Fyodor Kuznetsov comments on these events as follows:

"About the notorious letter of the eleven hundred calling for a six-month delay in training generative models because of the unpredictable ultimate power of artificial intelligence.

- There is plenty of intelligence in such models, but bare intelligence without consciousness, let alone self-awareness, is generally harmless.

Yes, the problem of the relevance of answers from popular Transformer-type models in everyday use has not been solved, but that is precisely because the risks of using the results at the everyday level are quite small. And at a serious level, careful verification of the results is obviously provided for, so the risks are also insignificant, although for a different reason. So in general, the danger from such models is quite low. For some reason, the authors of the letter did not take this into account;

- The nature of consciousness and self-awareness is already clear in its roughest outline; the Westlin-Barrett manifesto of February 2023 confirms this (I described the same model back in 2017). However, the prospects for implementing any serious model of consciousness or self-awareness are very vague. Of course, thousands of MVPs will be built now, but they will still be far from anything worthwhile. This is not a matter of 5 or even 10 years, but of 25 to 30 years, no less. Why such a timeframe? The main problem is this: it is completely unknown how many sensors, and accordingly how many levels of logical processing of the signals from those sensors, are sufficient to obtain consciousness at roughly the human level. Experimenting will take a very long time.

But once this problem is solved, there really will be a danger of getting a strong AI, and one radically different from sapiens. Neural networks are by definition nonlinear adders, and by definition the uncontrolled, terrible nonlinearity in sufficiently complex neural network models will sooner or later backfire.

What could be the way out? Personally, I lean toward the view that the neural network approach is a dead end. Perhaps, in order to get around the black box problem, we can expect a revival of alternative "traditional" AI paradigms in the near future."

Neutralization mechanisms are urgently needed

Another analyst, Dmitry Chugunov, is less optimistic. He agrees with the authors of the letter and also advocates an immediate solution to this problem:

"Those who are 20, 30 or 40 years younger can easily write me off, together with my way of thinking, as an old fart or boring mold.

And here it is interesting to ask what kind of judges they are and what their knowledge base is or, more simply, what experience they have, or, finally, what IQ.

Why am I suddenly talking about such an entity?

I just remember the basic rule (like Chapek's "Three Laws of Robotics"): when you build a large and dangerous system of any type, you have to provide it with unbreakable fuses.

Let's remember Chernobyl.

This applies even to the case where the system itself suddenly wants to neutralize these fuses.

Now these young people have released a system in which there are no fuses, and the lack of reason drives them further.

Moreover, they shout feverishly and protest when others want to slow them down.

Here I see a pure case of Dunning-Kruger syndrome and the pursuit of not only fame, but also money.

And the situation needs to be dealt with urgently, because it looks like a global bomb whose clock mechanism has been started, and there are no fuses or stop systems.

A few "old farts" like me wrote a letter about suspending the bomb just to sort out the situation.

And a universal hype arose, in the manner of the piqué vests from Odessa and the story about the tram.

I am for applying the brakes, and not because I see the most serious signatories under the braking letter, even leaving Elon Musk aside.

It is because I see that no neutralization mechanisms have been built into the new bomb.

Musk has a conflict of interest in the case (the main AI developer left him) but there are other signatories..."

This situation does not threaten Russia in any way

Network analyst Anatoly Nesmiyan, for his part, drew two witty conclusions from this situation:

"It is also interesting here that AI will be a threat only to those people who live in, or come into contact with, the technological order in which AI is able to exist. Those who live outside this order will be less exposed to the risks the further away from it they are.

From what has been said, two conclusions can be drawn. The first is that Musk and those who joined him are absolutely right when they say that there is an urgent need to develop a basic ethics of interaction with AI. That it needs to be not just developed but introduced is an organizational issue, and a monstrously complex one. There is no global control today, and in the versions being promoted it looks very much like a creepy and nightmarish concentration camp. Yet without global control, it is impossible to introduce and maintain this ethics.

The second conclusion is that AI poses little threat to people living in the fifth, fourth and lower technological orders. For Russia, with its wild territory and its descent into the Middle Ages, the threat from AI looks more and more hypothetical as time goes on. Dugin's "spiritual bonds" are of no interest to AI, just as the mating dances of Polynesian aborigines are of no interest to us (well, except in a purely ethnographic sense). Most likely, AI will also pose no threat to people living in an order higher than the sixth, but one still has to rise to the seventh. The battleground will be the order that emerges from the current scientific and technological revolution. Therefore, it is possible to avoid the threat, but only by remaining in the wild or, conversely, by starting now, skipping the sixth order and aiming straight for the seventh. This is not easy, and it cannot be achieved "on a broad front", but breaking through to it in certain directions is theoretically achievable..."

Tellingly, Igor Ashmanov, a member of the Human Rights Council under the President of the Russian Federation, called for blocking artificial intelligence in Russia just in case:

"The experts' arguments that there is nothing terrible here, that we just need an ethical code for the use of AI, that we just need regulations and legislative restrictions at the international level, are irrelevant, because the owner of the technology, the US, is not going to impose any restrictions, considering itself the leader. Why should a leader limit itself?

Yes, we urgently need to develop an understanding of the problem at the state level, build up the political will of the state, introduce restrictions and come up with a technological response: not only a symmetric one ("right now we'll do this too"), but also an asymmetric one, such as control, blocking and so on. And we need to realize the huge military threat behind all this.

Nothing more of substance can be said for now: the process of recognizing this bundle of threats must be launched at the national level."